Google has announced Gemini 3 Flash, its latest AI model, designed for speed and cost efficiency. Built on the foundation of Gemini 3, which debuted just a month earlier, the release is a strategic move by Google to strengthen its position against OpenAI. Gemini 3 Flash is now the default model for users of the Gemini app worldwide, as well as for AI-powered search, with the goal of delivering faster and more responsive user experiences.

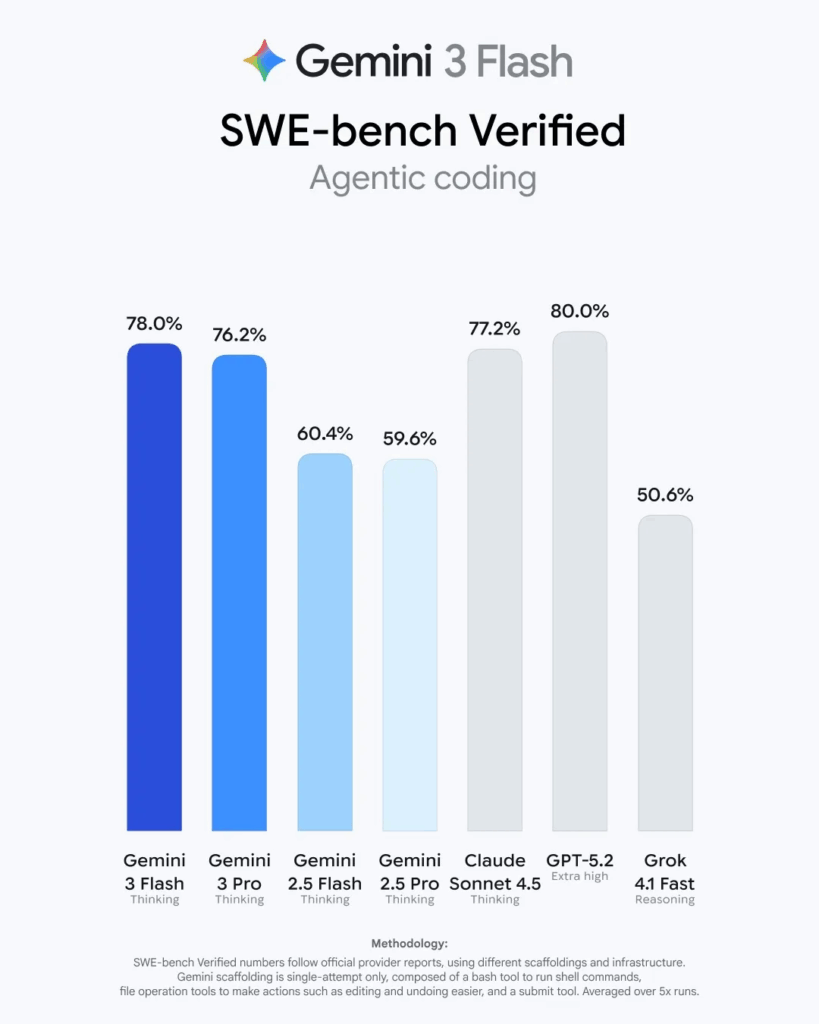

Despite arriving only six months after Gemini 2.5 Flash, Gemini 3 Flash represents a significant leap in performance. Early benchmark results suggest that the model performs close to higher-tier offerings such as Gemini 3 Pro and strong competitors like GPT-5.2 across multiple dimensions. On Humanity’s Last Exam, run without external tools, Gemini 3 Flash scored 33.7 percent, narrowly trailing GPT-5.2 at 34.5 percent.

In multimodal performance, Gemini 3 Flash has delivered standout results. On the MMMU Pro benchmark, the model reached a score of 81.2 percent, placing it among the top performers in the industry. These results highlight its ability to understand and analyze complex inputs, including images, audio, and text, with speed and accuracy that meet modern user expectations.

For everyday users, Gemini 3 Flash now serves as the primary assistant within the Gemini app globally. The model has been optimized to better understand user intent and to generate responses in a variety of formats, including structured tables and visual elements. It also supports advanced file analysis, allowing users to upload videos, audio clips, or even hand-drawn sketches to receive guidance or rapidly build application prototypes.

On the developer and enterprise side, major brands such as JetBrains, Figma, Cursor, and Harvey have already begun integrating Gemini 3 Flash through Vertex AI and Gemini Enterprise. API pricing is set at 0.50 USD per one million input tokens and 3.00 USD per one million output tokens. While slightly higher than for previous versions, the increased cost is positioned as a trade-off for faster processing speeds and more intelligent outputs.
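
To make the quoted rates concrete, here is a minimal sketch of how a per-request cost could be estimated from them. The function name `estimate_cost_usd` and the constant names are hypothetical, chosen for illustration. The sketch assumes flat per-token pricing at the two rates above and ignores any tiers, discounts, or other charges that the actual pricing may include.

```python
from decimal import Decimal

# Rates quoted in the article (USD per one million tokens).
INPUT_USD_PER_MILLION = Decimal("0.50")
OUTPUT_USD_PER_MILLION = Decimal("3.00")
TOKENS_PER_MILLION = Decimal(1_000_000)


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the cost of one request, assuming flat per-token pricing.

    Hypothetical helper: it applies only the two quoted rates and does not
    model tiers, discounts, or other billing details.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = Decimal(input_tokens) / TOKENS_PER_MILLION * INPUT_USD_PER_MILLION
    output_cost = Decimal(output_tokens) / TOKENS_PER_MILLION * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Example: 200,000 input tokens and 50,000 output tokens.
    print(estimate_cost_usd(200_000, 50_000))
```

Under these assumptions, a request with 200,000 input tokens and 50,000 output tokens comes to 0.10 USD plus 0.15 USD, or 0.25 USD in total. `Decimal` is used instead of floating point so that currency amounts are not affected by binary rounding.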

Competition in the AI landscape continues to intensify, with reports of heightened internal urgency at rival organizations following Google’s rapid advances. Google has emphasized that this competitive environment is a powerful driver of innovation, pushing AI technology beyond previous limits and toward systems that are more capable, helpful, and aligned with real-world human needs.

Source: TechCrunch