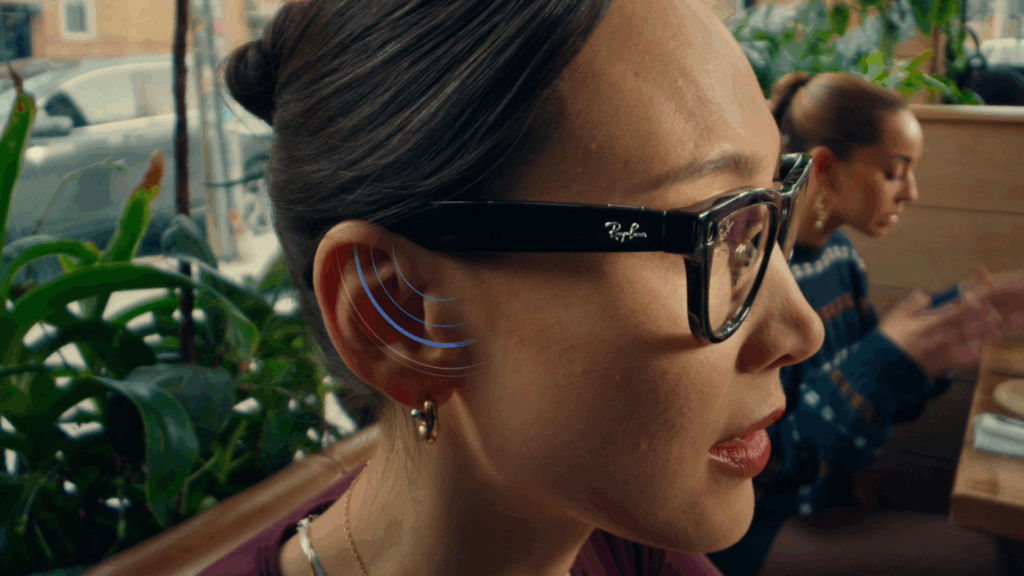

Meta has released software update v21 for its AI glasses, introducing a key feature that improves conversation clarity in noisy environments. This feature will initially roll out on Ray-Ban Meta and Oakley Meta HSTN glasses in the US and Canada. Users can now focus on the voice of the person in front of them, even in crowded restaurants or on trains.

Conversation Focus

The highlight of the update is the Conversation Focus system, first introduced at Meta Connect earlier this year. The glasses use directional microphones and open-ear speakers to amplify the voice of the person directly in front of the user while filtering out surrounding noise. This enables smooth, clear conversations in bars, clubs, or transit stations.

Users can adjust the volume of the amplified voice by swiping on the right temple of the glasses or through the app menu. Meta positions this as an important step toward using AI as a practical hearing-assist device for everyday life.

Spotify Integration

Update v21 also enhances entertainment with Spotify integration through multimodal AI. Users can say, “Hey Meta, play a song to match this view,” and the glasses will analyze the surroundings via the camera and play a Spotify playlist that matches the environment.

For example, if the user is looking at an album cover or a decorated Christmas tree, the glasses will select a playlist that complements the visual context. This combination of real-world surroundings and AI-powered entertainment marks an early step toward context-aware user experiences.

The Spotify feature is available in English across multiple countries, while Conversation Focus will first roll out to Early Access participants before expanding to the general user base. This update closes out 2025 by bringing Meta’s AI glasses closer to being a practical everyday companion.

Source: TechCrunch